History of Switching

To understand the importance of present-day switches, you need to understand how networks worked before switches were invented. During the mid to late 1980s, 10Base2 Ethernet was the dominant 10 Mbps Ethernet standard. This standard used thin coaxial cable with a maximum segment length of 185 meters and a maximum of 30 hosts connected to the cable. Hosts were connected to the cable using T-connectors. Most of the hosts at that time were either dumb terminals or early PCs that connected to a mainframe to access services.

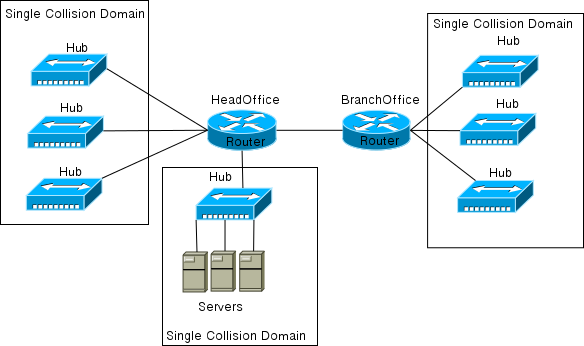

When Novell became very popular in the late 80s and early 90s, NetWare servers replaced the then popular OS/2 and LAN Manager servers. This made Ethernet more popular, because Novell servers used it to communicate between clients and the server. Increasing dependence on Ethernet, and the fact that 10Base2 technology was costly and slow, led to rapid development of Ethernet. Hubs were added to networks so that the 10BaseT standard could be used, with one host and one hub port connected on each cable. This led to collapsed backbone networks such as the one shown in Figure 6-1.

As you already know, networks made only of hubs suffer from problems such as broadcast storms and become slow and sluggish. The networks of the late 80s and early 90s suffered from the same problems. Meanwhile, dependence on networks and the services available on them grew rapidly. The corporate network became huge and very slow, since most services were available on it and remote offices depended on these services. Segmenting the networks and increasing their bandwidth became a priority. With the introduction of devices called bridges, some segmentation became possible. Bridges broke up collision domains but were limited by the number of ports available and by the fact that they could not do much apart from breaking up collision domains.

Figure 6-1 Collapsed Backbone Network

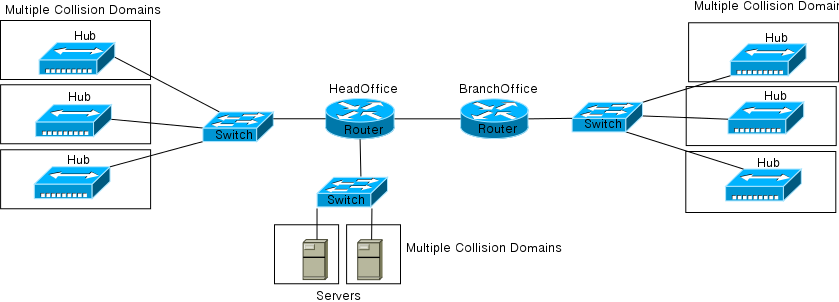

To overcome the limitations of bridges, switches were invented. Switches were multiport bridges that broke up the collision domain on each port and could provide many more services than bridges. The problem with the early switches was that they were very costly, which made it impractical to connect each individual host to a switch port. So after the introduction of switches, networks came to look like the one shown in Figure 6-2.

Figure 6-2 Early Switched Networks

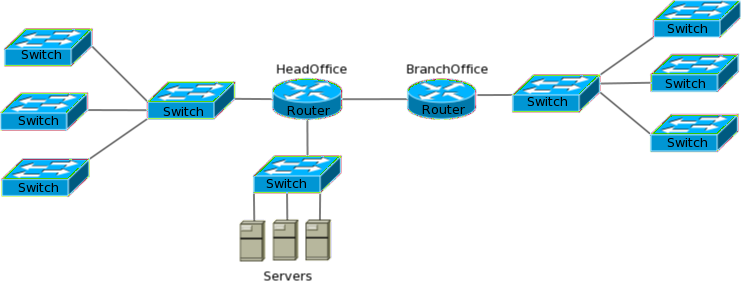

In these networks, each hub was connected to a switch port. This change increased network performance greatly, since each hub now had its own collision domain instead of the entire local network being a single collision domain. Such networks, though vastly better than what existed earlier, still forced hosts connected to the same hub to share a collision domain. This prevented the networks from attaining their potential. With the drop in the price of switches, this final barrier was also brought down. Cheaper switches meant that each host could finally be connected to its own switch port, thereby providing a separate collision domain for each host. Networks came to look like the one shown in Figure 6-3. Such networks had practically no collisions.

Figure 6-3 Switched networks

Understanding Switches and their limitations

While switches are multiport bridges, the two devices differ in one significant way. Bridges use software to build and maintain their switching and filtering tables, while switches use hardware, specifically application-specific integrated circuits (ASICs), to build and maintain their tables. Both devices provide a dedicated collision domain on each of their ports, but switches go a little further by providing the following features:

- Lower latency – Since switches use hardware-based bridging with ASICs, they work faster than software-based bridges.

- Wire speed – Hardware-based switching allows near wire-speed forwarding because processing time is low.

- Low cost – Switches are inexpensive, so the cost of connecting each host to its own port is very low.

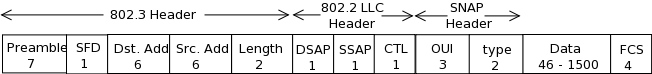

So what makes layer 2 switching this fast and efficient? The fact that layer 2 switching is hardware based and only looks at the hardware address in each frame before deciding on an action. Layer 3 routing, on the other hand, needs to look at the Layer 3 header information before making a decision. You will remember from chapter 1 that the destination MAC address starts at the 9th byte of a frame and is 6 bytes long. So the switch only has to read 14 bytes when a frame is received. Figure 6-4 shows a frame.

Figure 6-4 Ethernet Frame
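The byte arithmetic above can be sketched in a few lines of Python. This is only an illustration: it assumes the 8 bytes ahead of the destination MAC are the 7-byte preamble plus the 1-byte start frame delimiter, and the sample bytes and the function name `destination_mac` are made up for the example.

```python
PREAMBLE_AND_SFD = 8   # assumed: 7-byte preamble + 1-byte start frame delimiter
MAC_LENGTH = 6         # a MAC address is 6 bytes long


def destination_mac(raw: bytes) -> str:
    """Return the destination MAC in dotted notation (xxxx.xxxx.xxxx)."""
    end = PREAMBLE_AND_SFD + MAC_LENGTH          # 8 + 6 = 14 bytes read in total
    if len(raw) < end:
        raise ValueError("need at least 14 bytes to read the destination MAC")
    hexstr = raw[PREAMBLE_AND_SFD:end].hex()     # bytes 9 through 14
    return ".".join(hexstr[i:i + 4] for i in range(0, 12, 4))


# Hypothetical bytes as seen on the wire: preamble, SFD, destination MAC, padding.
wire = bytes([0x55] * 7 + [0xD5]) + bytes.fromhex("0014bc1e76ab") + bytes(50)
print(destination_mac(wire))   # 0014.bc1e.76ab
```

Nothing beyond the 14th byte is touched, which is the point being made: the forwarding decision can begin long before the rest of the frame has arrived.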

Overall, using switches to segment networks and provide connectivity to hosts results in a very fast and efficient network, with each host getting the full bandwidth.

While switches increase the efficiency of the network, they still have the limitations discussed below:

- While switches break collision domains, they do not break broadcast domains. The entire layer 2 network still remains a single broadcast domain. This makes the network susceptible to broadcast storms and related problems. Routers have to be used to break the broadcast domains.

- When redundancy is introduced in a switched network, the possibility of loops becomes very high. Dedicated protocols need to be run to ensure that the network remains loop free. This increases the burden on the switches. The convergence time of these protocols is also a concern, since the network is not usable during convergence.

Due to the above limitations, routers cannot be eliminated from the network. To design a good switched or bridged network, the following two important points must be considered:

- Collision domains should be broken up as much as possible.

- The users should spend 80 percent of their time on the local segment.

Bridging vs switching

While switches are just multiport bridges, there are many differences between them:

- Bridges are software based, while switches are hardware based since they use ASICs for building and maintaining their tables.

- Switches have a higher number of ports than bridges.

- Bridges have a single spanning tree instance, while switches can have multiple instances. (Spanning tree will be covered later in the chapter.)

While different in some aspects, switches and bridges share the following characteristics:

- Both look at the hardware address in the frame to make a decision.

- Both learn MAC addresses from the frames they receive.

- Both forward layer two broadcasts.

Three functions of a switch

A switch at layer 2 has the following three distinct functions:

- Learning MAC addresses

- Filtering and forwarding frames

- Preventing loops on the network

It is important to understand and remember each of these three functions. The following sections explain these three functions in depth.

Learning MAC Addresses

When a switch is first powered up it is not aware of the location of any host on the network. In a very short time, as hosts transmit data to other hosts, it learns the MAC address from the received frame and remembers which hosts are connected to which port.

If the switch receives a frame destined to an unknown address, it will flood the frame out of each port except the port the frame was received on. When the switch then receives a reply, it will add the replying host's address and the port it arrived on to its database. When another frame destined to this address is received, the switch does not need to flood it, since it already knows where the destination address is located.

You can see how switches differ from hubs. A hub will never remember which hosts are connected to which ports and will always flood traffic out of each and every port.

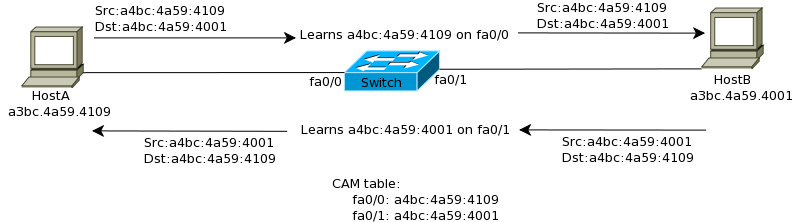

The table in which the addresses are stored is known as the CAM (content-addressable memory) table. To further understand how the switch populates the CAM table, consider the following example:

- A switch boots up and has an empty CAM table.

- HostA with a MAC address of a3bc.4a59.4109 sends a frame to HostB whose address is a3bc.4a59.4001.

- The switch receives the frame on interface fa0/1 and saves the MAC address of HostA (a3bc.4a59.4109) in its CAM table and associates it with interface fa0/1.

- Since the destination address is not known, the switch will flood the frame out all interfaces except fa0/1.

- HostB receives the frame and replies back.

- The switch receives the reply on interface fa0/2 and saves the MAC address of HostB (a3bc.4a59.4001) in its CAM table and associates it with interface fa0/2.

- The switch forwards the frame out interface fa0/1 since the destination MAC address (a3bc.4a59.4109) is present in the CAM table and associated with interface fa0/1.

- HostA replies back to HostB.

- The switch receives the frame and forwards it out interface fa0/2 because it has the destination MAC address (a3bc.4a59.4001) associated with interface fa0/2 in the CAM table.

The above exchange is illustrated in Figure 6-5.

Figure 6-5 Switch learning MAC addresses
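The exchange above can be replayed with a minimal sketch of a learning switch. The class name `LearningSwitch`, the plain dictionary standing in for the CAM table, and the third port fa0/3 are assumptions made for illustration; a real switch does this in hardware.

```python
class LearningSwitch:
    """Toy model of MAC learning; the CAM table is a dict of MAC -> port."""

    def __init__(self, ports):
        self.ports = list(ports)
        self.cam = {}

    def receive(self, in_port, src, dst):
        """Learn the source address, then return the ports the frame leaves on."""
        self.cam[src] = in_port                      # learn or refresh the source
        if dst in self.cam:
            out = self.cam[dst]
            return [] if out == in_port else [out]   # known destination
        return [p for p in self.ports if p != in_port]   # unknown: flood


HOST_A = "a3bc.4a59.4109"
HOST_B = "a3bc.4a59.4001"

sw = LearningSwitch(["fa0/1", "fa0/2", "fa0/3"])     # fa0/3 is a hypothetical spare port
print(sw.receive("fa0/1", HOST_A, HOST_B))   # ['fa0/2', 'fa0/3']  HostB unknown, flooded
print(sw.receive("fa0/2", HOST_B, HOST_A))   # ['fa0/1']           HostA already learned
print(sw.receive("fa0/1", HOST_A, HOST_B))   # ['fa0/2']           HostB now learned
print(sw.cam)
```

Only the first frame is flooded. After one frame in each direction both hosts are in the table and every later frame leaves on exactly one port.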

The switch will store a MAC address in the CAM table for a limited amount of time. If no traffic is heard from that address for a predefined period of time, the entry is purged from memory. This frees up memory space on the switch and also prevents entries from becoming out of date and inaccurate. This period is known as the MAC address aging time. On the Cisco 2950 it is 300 seconds by default and can be configured to be between 10 and 1000000 seconds. The switch can also be configured never to purge the addresses.
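A rough sketch of aging, assuming each entry simply records when its address was last heard. The class and method names are hypothetical, time is passed in as plain seconds to keep the example deterministic, and using `None` to mean "never purge" is a convention of this sketch, not the switch's configuration syntax.

```python
DEFAULT_AGING_TIME = 300   # seconds, the default quoted above


class AgingCamTable:
    """Toy CAM table whose entries expire after aging_time seconds of silence."""

    def __init__(self, aging_time=DEFAULT_AGING_TIME):
        self.aging_time = aging_time     # None means entries are never purged
        self.entries = {}                # MAC -> (port, time last heard)

    def learn(self, mac, port, now):
        """Record or refresh an entry whenever a frame from mac is heard."""
        self.entries[mac] = (port, now)

    def lookup(self, mac, now):
        """Return the port for mac, or None if it is unknown or has aged out."""
        entry = self.entries.get(mac)
        if entry is None:
            return None
        port, last_heard = entry
        if self.aging_time is not None and now - last_heard > self.aging_time:
            del self.entries[mac]        # stale entry is purged
            return None
        return port


table = AgingCamTable()
table.learn("0014.bc1e.76ab", "Fa0/1", now=0)
print(table.lookup("0014.bc1e.76ab", now=299))   # Fa0/1
print(table.lookup("0014.bc1e.76ab", now=301))   # None, so the next frame is flooded
```

Each new frame from a host calls `learn` again and resets its timer, so only hosts that have gone silent are removed.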

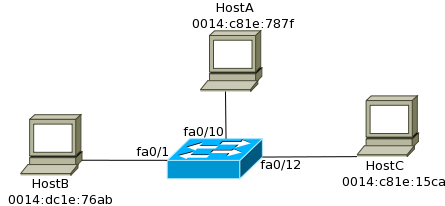

The command to see the CAM table of a switch is “show mac address-table”. Here is an example of the CAM table of a switch:

    Vlan    Mac Address       Type        Ports
    ----    -----------       --------    -----
       1    0014.bc1e.76ab    DYNAMIC     Fa0/1
       1    0014.c81e.787f    DYNAMIC     Fa0/10
       1    0014.c81e.15ca    DYNAMIC     Fa0/12

The CAM table shown above will be created for the network shown in Figure 6-6.

Note that the switch stores the Mac Address and the Port where the host is connected. Do not worry about the VLAN column at the moment; we will cover this in chapter 7.

Figure 6-6 CAM table

Filtering and Forwarding frames

When a frame arrives at a switch port, the switch examines its database of MAC addresses. If the destination address is in the database, the frame will only be sent out of the interface the destination host is attached to. This process is known as frame filtering. Frame filtering helps preserve bandwidth, since the frame is only sent out the interface on which the destination MAC address is connected. It also adds a measure of security, since hosts on other ports do not receive the frame.

On the other hand, if the switch does not know the destination MAC address, it will flood the frame out all active interfaces except the interface on which the frame was received. Another situation where the switch will flood a frame is when a host sends a broadcast message. Remember that a switched network is a single broadcast domain.

Let’s take two examples to understand frame filtering. A switch’s CAM table is shown below:

    Vlan    Mac Address       Type        Ports
    ----    -----------       --------    -----
       1    0014.bc1e.76ab    DYNAMIC     Fa0/1
       1    0014.c81e.787f    DYNAMIC     Fa0/10
       1    0014.c81e.15ca    DYNAMIC     Fa0/12

Suppose the switch receives a frame on fa0/1 destined for a host with a MAC address of 0014.c8ef.19fa. What will it do? Since the address is not known, the switch will flood the frame out all active interfaces except fa0/1. If a response is received from the destination host, its MAC address will be added to the CAM table.

In another example, if a host with MAC address of 0014.c8ef.20ae, connected to interface fa0/11 sends a frame destined to 0014.bc1e.76ab, the switch will add the source address to its CAM table and associate it with fa0/11. It will then forward the frame out fa0/1 since the destination address exists in the CAM table and is associated with fa0/1.

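Assuming the destination host from the first example (0014.c8ef.19fa) has since responded on interface fa0/15, the CAM table of the switch will now look like the following: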

    Vlan    Mac Address       Type        Ports
    ----    -----------       --------    -----
       1    0014.bc1e.76ab    DYNAMIC     Fa0/1
       1    0014.c81e.787f    DYNAMIC     Fa0/10
       1    0014.c81e.15ca    DYNAMIC     Fa0/12
       1    0014.c8ef.19fa    DYNAMIC     Fa0/15
       1    0014.c8ef.20ae    DYNAMIC     Fa0/11
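The forwarding decision in the two examples can be written as a small pure function. This is a sketch under assumptions: the name `decide`, the action labels, and the list of active ports are invented for illustration, and the "drop" case (destination on the same port the frame arrived on) is an extra case the examples above do not exercise.

```python
BROADCAST = "ffff.ffff.ffff"   # layer 2 broadcast address


def decide(cam, active_ports, in_port, dst):
    """Return (action, ports) for a frame that arrived on in_port."""
    others = [p for p in active_ports if p != in_port]
    if dst == BROADCAST or dst not in cam:
        return ("flood", others)            # broadcast or unknown destination
    if cam[dst] == in_port:
        return ("drop", [])                 # destination is on the arrival port
    return ("forward", [cam[dst]])          # known destination: one port only


# The three-entry CAM table from the examples above.
cam = {
    "0014.bc1e.76ab": "Fa0/1",
    "0014.c81e.787f": "Fa0/10",
    "0014.c81e.15ca": "Fa0/12",
}
# Assumed set of active ports; the text does not list them.
ports = ["Fa0/1", "Fa0/10", "Fa0/11", "Fa0/12", "Fa0/15"]

print(decide(cam, ports, "Fa0/1", "0014.c8ef.19fa"))
# ('flood', ['Fa0/10', 'Fa0/11', 'Fa0/12', 'Fa0/15'])
print(decide(cam, ports, "Fa0/11", "0014.bc1e.76ab"))
# ('forward', ['Fa0/1'])
```

The function deliberately leaves learning out, so it shows only the lookup: a hit yields a single egress port, and a miss or a broadcast yields every other active port.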

Switching Methods

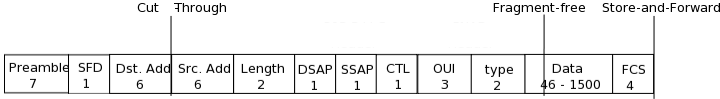

Any delay in passing traffic is known as latency. Cisco switches offer three ways to switch traffic, depending on how thoroughly you want the frame to be checked before it is passed on. The more checking you want, the more latency you introduce at the switch.

The three switching modes to choose from are:

- Cut-through

- Store-and-forward

- Fragment-free

Cut-through

Cut-through switching is the fastest switching method meaning it has the lowest latency. The incoming frame is read up to the destination MAC address. Once it reaches the destination MAC address, the switch then checks its CAM table for the correct port to forward the frame out of and sends it on its way. There is no error checking so this method gives you the lowest latency. The price however is that the switch will forward any frames containing errors.

The process of switching modes can best be described by using a metaphor.

You are the security at a club and are asked to make sure that everyone who enters has a picture ID. You are not asked to make sure the picture matches the person, only that the ID has a picture. With this method of checking, people are surely going to move quickly to enter the establishment. This is how cut-through switching works.

Store-and-forward

Here the switch reads the entire frame and copies it into its buffers. A cyclic redundancy check (CRC) takes place to check the frame for any errors. If errors are found, the frame is dropped; otherwise the switching table is examined and the frame is forwarded. Store-and-forward also ensures that the frame is at least 64 bytes and no larger than 1518 bytes. If the frame is smaller than 64 bytes or larger than 1518 bytes, the switch will discard it.

Now imagine you are the security at the club, only this time you have to not only make sure that the picture matches the person, but you must also write down the name and address of everyone before they can enter. Doing it this way takes a great deal of time and causes delay, and this is how the store-and-forward method of switching works.

Store-and-forward switching has the highest latency of all switching methods and is the default setting of the 2900 series switches.

Fragment-free (modified cut-through/runt-free)

Since cut-through cannot ensure that all frames are good and store-and-forward takes too long, we need a method that is both quick and reasonably reliable. Using our example of the nightclub security, imagine you are asked to make sure that everyone has an ID and that the picture matches the person. With this method you have made sure everyone is who they say they are, but you do not have to take down all the information. In switching we accomplish this by using the fragment-free method of switching.

This is the default configuration on lower level Cisco switches. Fragment-free, or modified cut-through, is a modified variety of cut-through switching. The first 64 bytes of a frame are examined for any errors, and if none are detected, the frame is passed on. The reasoning is that an error in a frame, typically the result of a collision, is most likely to show up in the first 64 bytes.

The minimum size of an Ethernet frame is 64 bytes; anything less than 64 bytes is called a “runt” frame. Since every frame must be at least 64 bytes before forwarding, this will eliminate the runts, and that is why this method is also known as “runt-free” switching.

The figure below shows which method reads how much of a frame before forwarding it.

Different Switching methods
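The difference between the three methods can be summarized as a short sketch that decides whether a frame is forwarded or dropped. It is a simplification under stated assumptions: the function name `handle` is hypothetical, the frame length is taken as already known, and fragment-free is modeled only by its runt check on the first 64 bytes.

```python
MIN_FRAME = 64      # bytes; anything shorter is a runt
MAX_FRAME = 1518    # bytes; largest frame store-and-forward accepts

MODES = ("cut-through", "fragment-free", "store-and-forward")


def handle(mode, frame_len, crc_ok):
    """Return 'forward' or 'drop' for one frame under the given switching mode."""
    if mode == "cut-through":
        # Forwards as soon as the destination MAC is read: no checks at all.
        return "forward"
    if mode == "fragment-free":
        # Waits for the first 64 bytes, so runts are caught but CRC errors are not.
        return "drop" if frame_len < MIN_FRAME else "forward"
    if mode == "store-and-forward":
        # Buffers the whole frame: checks size limits and the CRC.
        if frame_len < MIN_FRAME or frame_len > MAX_FRAME or not crc_ok:
            return "drop"
        return "forward"
    raise ValueError(f"unknown switching mode: {mode}")


# A 40-byte runt, and a full-size frame with a bad CRC.
print([handle(m, 40, crc_ok=False) for m in MODES])
# ['forward', 'drop', 'drop']
print([handle(m, 1000, crc_ok=False) for m in MODES])
# ['forward', 'forward', 'drop']
```

Reading the two result lines left to right shows the trade-off: each step toward store-and-forward catches more bad frames but has to wait for more of the frame before forwarding.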

Preventing loops in the network

Having redundant links between switches can be very useful. If one path breaks, the traffic can take an alternative path. Though redundant paths are extremely useful, they often cause a lot of problems. Some of the problems associated with such loops are broadcast storms, endless looping, duplicate frames and faulty CAM tables. Let’s take a look at each of these problems in detail:

- Broadcast Storms – Without loop avoidance techniques in place, switches can endlessly flood a broadcast in the network. To understand how this can happen, consider the network shown in Figure 6-7.

Figure 6-7 Broadcast Storms

In the network shown in Figure 6-7, consider a situation where HostA sends out a broadcast. The following sequence of events will then happen:

- SwitchA will forward the frame out all interfaces except the one connected to HostA. HostB will receive a copy of this broadcast. Notice that the frame will also have gone out of interfaces fa0/1 and fa0/2. For ease of understanding, let's call the frame going out of fa0/1 frame1 and the frame going out of fa0/2 frame2.

- When SwitchB receives frame1, it will flood it out all interfaces including fa0/2. When it receives frame2, it will flood it out all interfaces including fa0/1. HostC and HostD will receive both frames, which actually means they receive two copies of the same frame. Meanwhile, one frame each was sent out fa0/2 and fa0/1 towards SwitchA! Let’s call these frames frame3 and frame4.

- When SwitchA receives frame3, it will flood it out all interfaces including fa0/1, and when it receives frame4 it will flood it out all interfaces including fa0/2. This means HostB and HostA both receive two broadcasts. Remember that HostA was the original source of the broadcast, while HostB has already received one copy! But the worst part is that two more frames went out to SwitchB. Now the previous and the current step will repeat endlessly, and the four hosts will continuously receive the broadcast.

If multiple broadcasts are sent out to this network, each of them will endlessly be sent to every host in the network thereby causing what is known as a broadcast storm.
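The sequence of events above can be simulated in a few lines. The topology is an assumption read off the description of Figure 6-7: HostA and HostB on SwitchA, HostC and HostD on SwitchB, and the two switches joined by two links, fa0/1 to fa0/1 and fa0/2 to fa0/2. All names in the code are illustrative.

```python
from collections import Counter

UPLINKS = ["fa0/1", "fa0/2"]                       # the two inter-switch links
HOSTS = {"SwitchA": ["HostA", "HostB"], "SwitchB": ["HostC", "HostD"]}
PEER = {"SwitchA": "SwitchB", "SwitchB": "SwitchA"}


def flood(switch, ingress):
    """Flood a broadcast out every port except the one it arrived on."""
    hosts = [h for h in HOSTS[switch] if h != ingress]
    uplinks = [u for u in UPLINKS if u != ingress]
    return hosts, uplinks


def simulate(rounds):
    """Return (copies delivered per host, frames still circulating)."""
    delivered = Counter()
    hosts, uplinks = flood("SwitchA", "HostA")     # HostA sends the broadcast
    delivered.update(hosts)
    in_flight = [("SwitchB", u) for u in uplinks]  # frame1 and frame2
    for _ in range(rounds):
        next_flight = []
        for switch, ingress in in_flight:
            hosts, uplinks = flood(switch, ingress)
            delivered.update(hosts)
            next_flight += [(PEER[switch], u) for u in uplinks]
        in_flight = next_flight
    return delivered, len(in_flight)


copies, still_looping = simulate(4)
print(dict(copies))     # {'HostB': 5, 'HostC': 4, 'HostD': 4, 'HostA': 4}
print(still_looping)    # 2
```

After four rounds a single broadcast has already been delivered 17 times, and two copies are still circulating between the switches. Nothing in the frames ever removes them, so the counts keep growing for as long as the loop exists.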

- Endless looping – Similar to what happens in a broadcast storm, consider a situation where HostA in Figure 6-7 sends a unicast destined to a host which does not exist in the network. SwitchA will receive the frame and will see that it does not know the destination address. It will forward it out all interfaces except the one where HostA is connected. SwitchB will receive two copies of this frame and will flood them out all its interfaces, since it does not know the destination address either. Since SwitchB will flood the frames out fa0/1 and fa0/2, SwitchA will receive the frames again and the endless loop will continue.

- Duplicate frames – In the network shown in Figure 6-7, consider a situation where HostA sends a frame destined to HostD. When SwitchA receives this frame, it will not know where HostD is and will flood it out all the interfaces. SwitchB will receive one copy each on its fa0/1 and fa0/2 interfaces. It will check the destination address and send both copies to HostD. In effect, HostD will have received a duplicate frame. This might cause problems with protocols using UDP, and especially with voice packets.

- Faulty CAM table – Consider the situation where HostA sends a frame destined to HostC. When SwitchA receives the frame, it does not know where HostC resides, so it will flood the frame. SwitchB will receive the frame on both fa0/1 and fa0/2. It will read the source address and store it in its CAM table. Now it has seen the same source address on two interfaces! A switch cannot have two entries for a single address, so it will keep overwriting the entry with new information as frames are received on multiple interfaces. This can overwhelm the switch, and it might stop forwarding traffic.

All of these problems can cause a switched network to come crashing down. They should be entirely avoided or at least fixed. Hence, the Spanning Tree Protocol was created to keep the network loop free. We will be discussing STP shortly.